| Type | MA |
| --- | --- |
| Supervisor | Prof. Dr.-Ing. Alois Knoll |
| Advisor | Dr.-Ing. Giorgio Panin, Dipl.-Ing. Claus Lenz |
| Student | Önder Tato |
| Research Area | CoTeSys |
| Associated Projects | ITrackU, JAHIR |
| Programming Language | C++ |
| Required Skills | Image Processing, Computer Vision, Computer Graphics |
| Useful Knowledge | Techniques for face/gaze detection, modeling, tracking |

Description

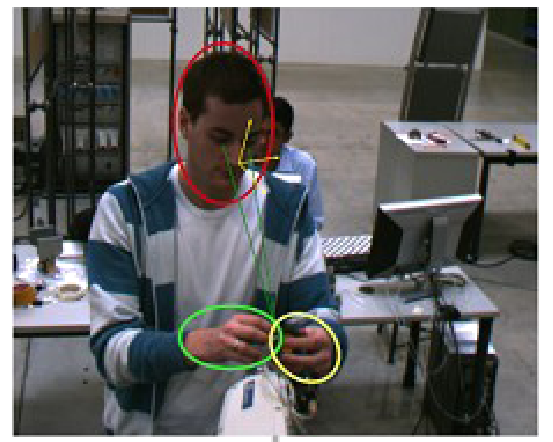

The goal of this project is to obtain the focus of attention of a person ("where is he/she looking?") for human-robot interaction in the JAHIR scenario.
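One way to make "where is he/she looking?" concrete is to intersect the estimated gaze ray with a known surface such as the desk. The following is a minimal sketch under stated assumptions, not the project's implementation: the names `Vec3`, `dot`, and `gazeTargetOnPlane` are hypothetical, the desk is modeled as an ideal plane, and the eye position and gaze direction are assumed to be supplied by the tracker in the same coordinate frame as the plane.

```cpp
#include <array>
#include <cmath>

// Hypothetical 3D vector type used only for this illustration.
using Vec3 = std::array<double, 3>;

inline double dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Intersects the gaze ray  eye + t * gazeDir  (t >= 0)  with the plane
// { x : dot(planeNormal, x) = planeOffset }.
// Returns true and writes the intersection to `hit` if the person is
// looking towards the plane; returns false if the ray is (nearly)
// parallel to the plane or points away from it.
bool gazeTargetOnPlane(const Vec3& eye, const Vec3& gazeDir,
                       const Vec3& planeNormal, double planeOffset,
                       Vec3& hit) {
    const double denom = dot(planeNormal, gazeDir);
    if (std::abs(denom) < 1e-9) {
        return false;  // gaze is parallel to the plane
    }
    const double t = (planeOffset - dot(planeNormal, eye)) / denom;
    if (t < 0.0) {
        return false;  // plane lies behind the viewer
    }
    hit = {eye[0] + t * gazeDir[0],
           eye[1] + t * gazeDir[1],
           eye[2] + t * gazeDir[2]};
    return true;
}
```

For example, with the desk as the plane z = 0 (normal (0, 0, 1), offset 0), an eye at (0, 0, 1) looking along (1, 0, -1) yields the target point (1, 0, 0) on the desk.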

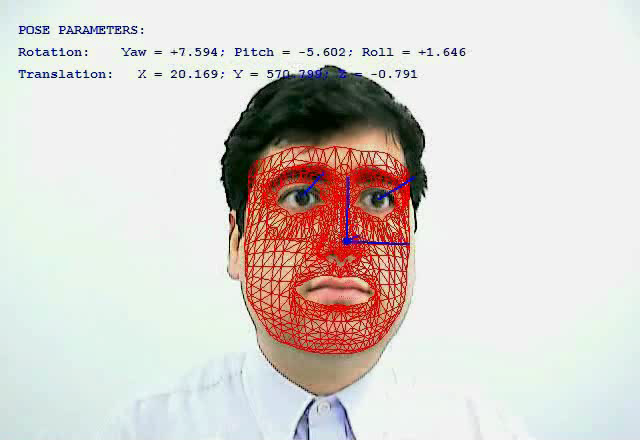

The proposed methodology involves a real-time 3D face and gaze tracking system, based on a fully textured model of the subject. For this purpose, the OpenTL library of the ITrackU project will be used.

This model can be acquired from two photos (front and profile view), by adapting a generic head model to the facial features (eyes, nose, lips etc.), and subsequently applying the texture images to the model.

The current implementation of the modeling procedure is fast, and does not require particular user skills. However, it could be made more interactive and reliable.

The project is organized into the following milestones:

Main tasks:

- Software:
  - Extend the face tracker to a stereo set-up (or multiple views?)
  - Implement point visibility
  - Increase robustness (initialization, lighting conditions, texture adaptation, partial occlusions by the robot, ...), precision (resolution in degrees), and speed (the faster, the better)
- Hardware:
  - Find the best camera configuration
  - Calibrate the cameras
  - Integrate the cameras into the JAHIR demonstrator
- Evaluation:
  - Use a projector in the set-up and known trajectories on the desk to verify the precision
  - Compare with the initial version (speed, precision, etc.)
  - Test it with the robot "in action"
- Interfaces:
  - Implement an Ice interface to integrate the tracking into the JAHIR architecture
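
For the evaluation milestone, precision in degrees could be scored as the angle between the estimated gaze direction and the direction from the eye to a known target point on the desk. The sketch below is only an illustration under assumptions: `Point3` and `angularErrorDeg` are hypothetical names, and the eye position, estimated direction, and ground-truth target are assumed to be expressed in one common coordinate frame.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <limits>

// Hypothetical 3D point/vector type used only for this illustration.
using Point3 = std::array<double, 3>;

// Angle in degrees between the estimated gaze direction and the true
// direction from the eye position to a known target point.
// Returns NaN if either direction has (near) zero length.
double angularErrorDeg(const Point3& estimatedDir, const Point3& eye,
                       const Point3& target) {
    const Point3 trueDir = {target[0] - eye[0],
                            target[1] - eye[1],
                            target[2] - eye[2]};
    double dotProduct = 0.0, normEst = 0.0, normTrue = 0.0;
    for (int i = 0; i < 3; ++i) {
        dotProduct += estimatedDir[i] * trueDir[i];
        normEst += estimatedDir[i] * estimatedDir[i];
        normTrue += trueDir[i] * trueDir[i];
    }
    normEst = std::sqrt(normEst);
    normTrue = std::sqrt(normTrue);
    if (normEst < 1e-12 || normTrue < 1e-12) {
        return std::numeric_limits<double>::quiet_NaN();
    }
    // Clamp to [-1, 1] so rounding errors cannot push acos out of range.
    const double cosAngle =
        std::max(-1.0, std::min(1.0, dotProduct / (normEst * normTrue)));
    const double pi = std::acos(-1.0);
    return std::acos(cosAngle) * 180.0 / pi;
}
```

Averaging this error over all samples of a known trajectory would give one number per run, which makes the comparison with the initial version straightforward.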

Location: Garching

Literature

For more information about our activity, please refer to our Lectures on Model-based Visual Tracking.